As Clouds Move Closer to the Edge, Networks Maximizing Their Value Will Share Their Cloud-Native DNA

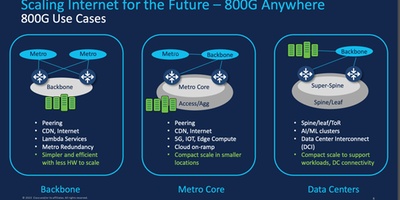

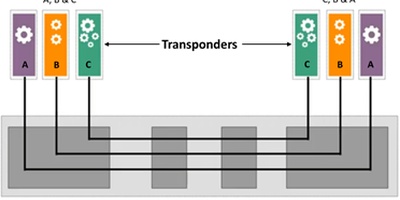

Cloud configurations are evolving rapidly, with new instances materializing at network and premises edge locations. For example, communications service providers (CSPs) are introducing new and innovative services by merging cloud-native workloads into the edges of their highly distributed networks. Similarly, businesses pursuing digital transformation are placing imaginative new applications into edge cloud implementations at the edges of their increasingly distributed sites.

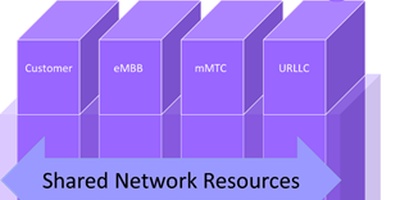

Local edge workloads typically run in compact edge cloud implementations, using container-based microservices software similar to the software running in more centralized, larger-scale clouds. These workloads are often designed with the expectation that they will function seamlessly, to the greatest degree possible, in coordination with the high-level task flows and operational intent of the organizations deploying them. This is true for a 5G network coordinating its core and RAN operations, for MEC applications running partly at the local edge and partly in a larger cloud, and for enterprise apps whose work is divided between a local edge site and the larger corporate operation.

One assumption embedded in all of these cases is that the network supporting the workloads also operates in seamless alignment with the apps and with the organization's overall objectives, or intent. Put simply, this is much easier said than done.

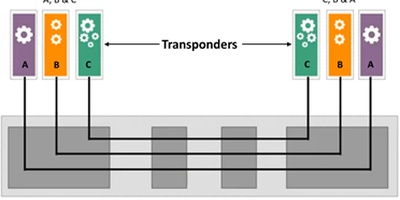

To a first order, the network must mirror the requirements of workloads whose capacity and throughput vary significantly throughout a working day, and it must support the diversity of communication in the adjacent network infrastructures with which the edge cloud needs to align. Doing both requires a versatile network design with a number of powerful yet simplifying capabilities. In addition, having hundreds, or in many cases (as with CSPs) thousands, of distributed edge sites operate in coordination with the organization's larger ICT operations (both its networks and its clouds) requires orchestration and life-cycle automation at scale, spanning both edge and core. That level of automation has not been readily available in the past, yet it is needed for distributed cloud operations going forward.

Seeing this as both a challenge and an opportunity, Nokia has applied its considerable expertise in virtualized, cloud-native data center operations and in deploying large-scale service provider networks. It has also worked closely with its CSP, cloud, and enterprise customers to understand these requirements clearly and to develop an impressively complete and forward-looking solution to meet them. Called Adaptive Cloud Networking, the solution provides the ability:

- to merge customers’ service delivery intent into their deployments at scale,

- to integrate the operation of the network infrastructure with the cloud software platforms it supports,

- to continuously collect insights from the network about how well it is meeting its objectives, and

- to continuously evolve the network's operation through NetOps, an adaptation of CI/CD DevOps techniques, using a modular, microservices-based software design throughout its implementation, from top to bottom and from end to end.

It is a worthy new entry in the field of large-scale, intent-based, software-driven cloud and data center networking, suited to CSPs' and other distributed clouds.

For more information, read ACG Research’s assessment of the edge networking solution that Adaptive Cloud Networking provides in this innovation spotlight brief: https://onestore.nokia.com/asset/212326

Contact Paul Parker-Johnson at pj@acgcc.com.