Will a Real-Time Digital Twin Be the Next Expert You Hire into Your Network Operations Team?

Practitioners in a variety of industries have been adopting real-time digital twins in their operations at an increasing rate over the past several years. Improvements in efficiency, certainty of decision-making, and quality of outcomes have been among the top motivators for the uptake. Use cases in manufacturing, transportation, public safety, and health care have been demonstrating the value. Broader adoption has been made possible by lower cost and higher performance in the hardware running twins, by greater ease of use in the software that runs them, and by practitioners' growing comfort with using a wide range of digital tools in their work.

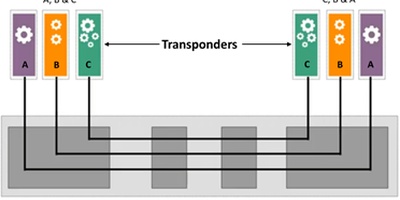

At the same time, network engineers in various domains have been exploring the use of digital twins embedded in their life-cycle management tool sets. The difference between off-line modeling tools (which we’ve had for many years) and embedded real-time digital twins is that the latter are meant to be active, accurate representations of the running state of the infrastructure they mirror, and to be accessible to a wide range of applications and tasks throughout the life cycle of a network’s deployment.

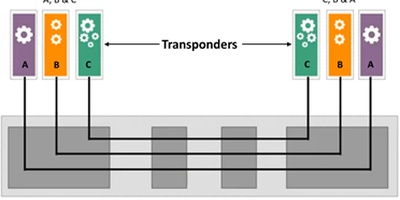

One might say that’s a tall order, especially if the network being managed has a diversity of node types, has a number of configuration layers to be cared for, and is connected to a range of adjacent systems and domains that influence how it works. In response I would say yes, it is a tall order, but a manageable one, especially if the domain and the scope of deployment are clearly understood, if the instances, protocols, and APIs employed in embedding the twins are built on open, standardized technologies, and if they are implemented using workflows and mechanisms that fit well into the operational model of the domain.

A proof point for the value and feasibility of incorporating such a digital twin into the life cycle management platform for a network, in this case for a cloud-native data center fabric, is the digital twin included in the Fabric Services System of Nokia’s Data Center Fabric offering (part of its Adaptive Cloud Networking portfolio, https://www.nokia.com/networks/data-center/adaptive-cloud-networking/).

The twin is accessible to applications at every stage of life cycle management in a deployment. Day 0 designs can be refined by examining how they might impact the running network. Day 1 deployments can be accelerated by planning in advance how the evolving network will look and behave. And ongoing operations in Day 2+ can be strengthened by comparing measurements and events with the intended state of the network. Task execution becomes faster and more efficient at each of these life cycle stages, and the confidence of the network team and all of its stakeholders in the quality and reliability of the network infrastructure is improved.
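To make the Day 2+ idea of comparing measurements with intended state a bit more concrete, here is a minimal Python sketch. It is only an illustration of the general technique, not the Fabric Services System's API or data model: the `find_drift` function, the flat key/value representation of state, and the example paths and values are all hypothetical.

```python
from typing import Any


def find_drift(intended: dict[str, Any],
               observed: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {key: (intended_value, observed_value)} for every key that differs.

    A key present on only one side is reported with None for the missing value.
    """
    drift: dict[str, tuple[Any, Any]] = {}
    for key in sorted(intended.keys() | observed.keys()):
        want = intended.get(key)
        have = observed.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical intended state, as a twin might hold it.
intended = {
    "leaf1/ethernet-1/1/admin-state": "enable",
    "leaf1/ethernet-1/1/mtu": 9000,
    "leaf1/ethernet-1/2/admin-state": "enable",
}

# Hypothetical state reported by the running network.
observed = {
    "leaf1/ethernet-1/1/admin-state": "enable",
    "leaf1/ethernet-1/1/mtu": 1500,
}

for key, (want, have) in find_drift(intended, observed).items():
    print(f"{key}: intended={want!r} observed={have!r}")
```

In this toy example the comparison flags a mismatched MTU and an interface that is intended but not reported at all. A real twin would work against a much richer, structured model and a continuous telemetry stream, but the underlying question is the same: where does the running network differ from what was intended?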

To get a sense of some of the implementation’s key attributes and the results it can achieve, listen in to the conversation that Bruce Wallis, Pat McCabe, and I had about it in Part 5 of our video series on cloud-native architectures in data center fabric designs: https://bit.ly/3UZzrf8

Contact Paul Parker-Johnson at pj@acgcc.com for more information.