Tipping Point: Time to Buy In on Cloud-Native Data Center Fabrics

To excel in a cloud-native world, our data center fabrics should be, well, cloud-native too: top to bottom, end to end.

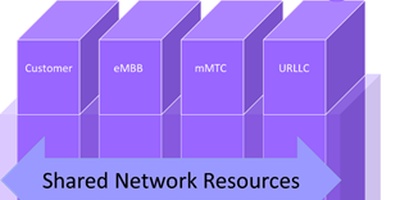

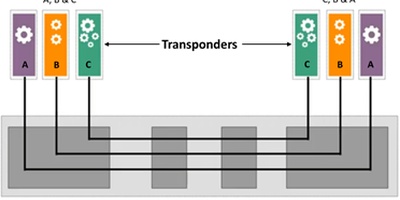

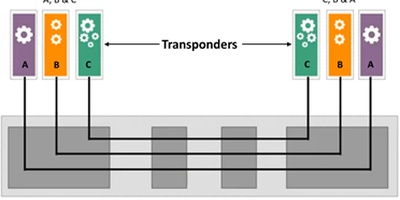

As an industry we’ve had a good amount of time (10+ years) to experiment with, analyze, and work with network and computing designs that are more open, modular, software-driven, and deployable in a cloud-native mode than those we’ve used in the past. These designs borrow many of their principal features from hyperscaler implementations and are built using publicly available specifications and code (for example, gRPC, gNMI, OpenConfig, Linux-based NOS, container-based microservices, CI/CD, Kubernetes automation). In the past several years we have bet with increasing frequency that we can obtain significant, lasting benefits from adopting cloud-native designs. That said, approaches taken to date have tended to focus on modest pockets of implementation, often in a relatively cautious, incremental mode, and for generally good reasons: the technology at several layers of the stack hasn’t been robust enough to support the levels of stability and manageability, and the range of deployment scenarios, that operators require. Thanks to continued development, experimentation, and hard work at many layers of the stack and in many domains, we have now passed a tipping point in this journey. For most scenarios, from core data centers to smaller edge sites, basing one’s strategy on a cloud-native fabric architecture is now a viable, beneficial plan.

Following this path allows practitioners to break free from the constraints of bolt-on designs and moderately scoped enhancements, which to date have held back the velocity of innovation achievable with fully cloud-native modes. With a thoughtful and disciplined approach, one can start using cloud-native architectures as a pillar in deployment plans today. These choices will need to be adhered to firmly over the long run to generate the best results. Embracing this model will make it possible to swap out hardware and software (including NOS and management tools) and to extend implementations with independently developed functionality at each layer over the life of a solution (one can draw the line in openness and modularity wherever one sees fit).

These perspectives are not gentle in their downstream implications. Wider adoption of this paradigm (as we have seen in the white-box hardware and open NOS segments) will disrupt prior modes and the status quo, usher in new offerings and suppliers, and lead to a more fluid, adaptable market. It does not predetermine success or failure for any one party. It simply introduces a fluidity and agility into suppliers’ and operators’ practices that will keep stakeholders firmly focused on which choices will create the greatest value and how they should be implemented.

While analyzing these dynamics in the broader market, during the past year I’ve had the opportunity to explore them in detail with the data center fabric leadership team at Nokia. Several years ago Nokia made the prescient decision to parlay its deep expertise in multiple engineering and networking disciplines (among them IP, MPLS, and Ethernet networking; network operating systems design; and automation of network life cycles at very large scale) into a fresh design for data center networks, grounded in cloud-native principles from the start. The resulting portfolio supports open, modular designs at every layer of the stack and is usable in deployments from hyperscale DCs and distributed DC sites to compact edge computing nodes. The openness of the software implementation (in orchestration and life-cycle management and in the NOS) is a stellar example of building agility and flexibility into an elastic, distributed infrastructure for deployments at very large scale (thousands of nodes and thousands of sites working under commonly defined and orchestrated intent). It is a marquee example of how to leave technological impediments behind and open up innovation in support of future service deliveries. Appropriately, the portfolio is marketed under the banner Adaptive Cloud Networking. Have a look at its characteristics: https://www.nokia.com/networks/data-center/adaptive-cloud-networking/

During this engagement, the Nokia team and I had the opportunity to discuss many of the architectural considerations involved in delivering such an agile portfolio to customers. Bruce Wallis and Pat McCabe of Nokia’s data center fabric team and I delved into topics ranging from NetOps and CI/CD in network operations, to using network digital twins to accelerate and improve operations, to the types of automation that will succeed in supporting operations as clouds become larger and more distributed. We broke the topics into seven video segments to make them consumable for a wide range of practitioners. Each is a reflection on what makes cloud-native design different from where we’ve been and why it’s a superior model to use moving forward. Have a look at any or all of those conversations at: https://www.nokia.com/blog/the-seven-building-blocks-critical-for-success-in-the-next-generation-of-telco-clouds/?did=D00000001216&utm_campaign=Data+Center+Network+Switching+2022&utm_source=linkedin&utm_medium=organic&utm_term=697c62a4-373a-49d2-8d8f-5a0121279b9e

Stay tuned for posts on individual elements of these designs in the coming days, which will add context on their value.

For more information, contact Paul Parker-Johnson at pj@acgcc.com.